Regular readers will know that in part 3 of the Workaround ESXi CPU Unsupported Error series (check them out), during my very limited testing, I found that my Dell R710 home server was booting and running ESXi 7.0 quite happily, with just the one exception… No datastores.

My Dell PERC H700 RAID controller had gone AWOL under ESXi 7.0.

Update: Since writing this post, things have moved on. Check out this post for the new developments!

In this post, I’ll try to address that. First off, the standard disclaimer applies:

- Proceed at your own risk

- What follows is totally unsupported

- You alone are responsible for the servers in your care

- These modifications should NOT be made on production systems

Also - whilst I’ve tested what follows in a VM,

I HAVE NOT YET TESTED WITH REAL HARDWARE!

Overview

VMKlinux Driver Stack Deprecation

After some research, I found this post on the VMware blogs site: What is the Impact of the VMKlinux Driver Stack Deprecation?

As you can guess from that title, from v7.0 onwards, ESXi will no longer support VMKlinux based drivers.

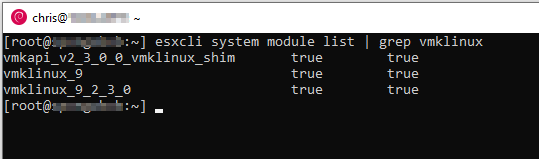

Hmm, OK. I kind of know the answer already, but let’s see. My R710 is currently running ESXi 6.7U3, so let’s run the suggested test:

Yeah, no surprises there. We did the testing the hard way anyway. It’s the H700 that’s using a VMKlinux driver.
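For anyone who wants to repeat that check, here is a minimal sketch. It assumes the suggested test amounts to looking for the vmklinux module in the output of `esxcli system module list`. The `uses_vmklinux` helper name is made up for illustration and simply filters whatever is piped into it.

```shell
#!/bin/sh
# Hypothetical helper: reads a module listing on stdin and reports
# whether a vmklinux module name appears anywhere in it.
uses_vmklinux() {
    if grep -qi 'vmklinux'; then
        echo "vmklinux module present: at least one legacy driver is loaded"
    else
        echo "no vmklinux module found: only native drivers are loaded"
    fi
}

# Assumed usage over SSH on an ESXi 6.x host:
#   esxcli system module list | uses_vmklinux
```

If the module shows up, the next job is working out which device depends on it, which is where the VCG lookup comes in.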

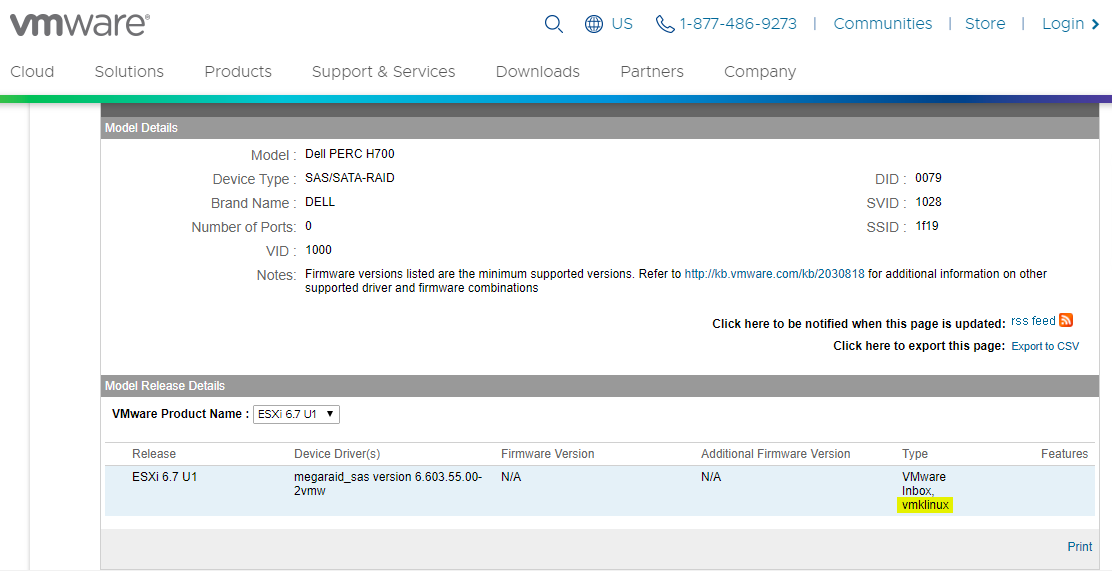

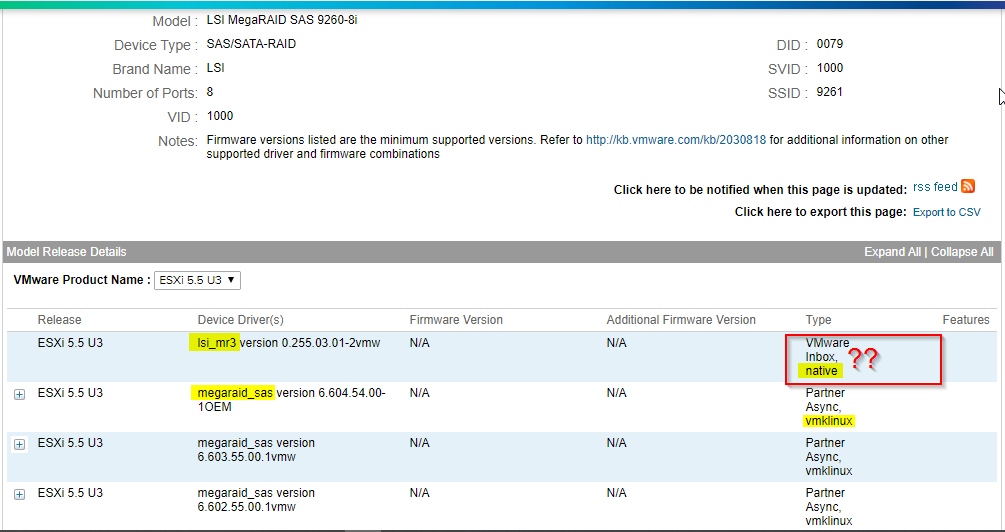

We can confirm that by looking in the VMware Compatibility Guide (VCG):

Time to do some reading…

Dell PERC H700 RAID Controller - Tell Me More

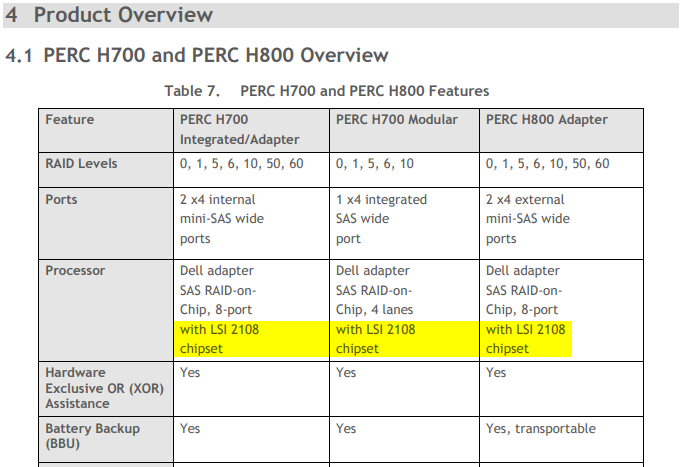

Dell’s own documentation, the PERC Technical Guidebook, lists the H700 and H800 adapters as based on the LSI 2108 chipset:

We know that LSI don’t just make chipsets for Dell. What other RAID controller cards use the LSI 2108 chipset?

- LSI MegaRAID SAS 9260 / 9260DE - pdf

- Lenovo / IBM ServeRAID M5015 / M5014 - pdf

- Intel RS2BL080 / RS2MB044 - pdf

- Fujitsu S26361-D2616-Ax / S26361-D3016-Ax - pdf

- Cisco C200 / C460 / B440 Servers - pdf

…to name just a few.

I even found the LSI 2015 Product Guide, which contains something like thirteen LSI 2108 chipset based cards, if you fancy spending a couple of hours on the VMware Compatibility Guide!

Clutching at Straws

Checking the VCG with the first five above, straight away I noticed something:

Hmmm, so back in the ESXi 5.5 days, LSI 2108 based cards used the native lsi_mr3 driver…!?! Check out the above VCG entry for the LSI MegaRAID SAS 9260-8i for yourself HERE

Wait, when did the VMKlinux Driver Stack Deprecation start? ESXi 5.5!

Adding H700 Support Back to lsi_mr3

Many modern operating systems use PCI card hardware identifiers (aka PCI IDs) to identify and to ensure that the correct driver is loaded for the hardware present. Taking the H700 for example, we can see from the VMware VCG (after pulling all the entries together):

Vendor ID (VID) = 1000

Device ID (DID) = 0079

SubVendor ID (SVID) = 1028

SubDevice ID (SDID) = 1f16 (Dell PERC H700 Adapter)

SubDevice ID (SDID) = 1f17 (Dell PERC H700 Integrated)

SubDevice ID (SDID) = 1f18 (Dell PERC H700 Modular)
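To show how these IDs end up in a driver map entry, here is a small sketch that builds one. It assumes the `id=` field is 22 characters wide (VID, DID, SVID, SDID, then six further positions) and that a `.` acts as a wildcard for a single character. That layout is inferred from the entry used later in this post, not from any VMware documentation, and `make_map_line` is a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: build a driver map line from PCI IDs.
# Assumed field layout: VID(4) DID(4) SVID(4) SDID(4) + 6 trailing positions,
# with "." as a single-character wildcard.
make_map_line() {
    vid=$1 did=$2 driver=$3
    svid=${4:-....}   # wildcard when no sub-vendor ID is given
    sdid=${5:-....}   # wildcard when no sub-device ID is given
    printf 'regtype=native,bus=pci,id=%s%s%s%s......,driver=%s\n' \
        "$vid" "$did" "$svid" "$sdid" "$driver"
}

# Match any card with VID 1000 / DID 0079:
make_map_line 1000 0079 lsi_mr3
# Match only the Dell PERC H700 Adapter (SVID 1028, SDID 1f16):
make_map_line 1000 0079 lsi_mr3 1028 1f16
```

The first call produces the wildcard form, which claims every 1000:0079 device regardless of vendor branding. The second is a narrower alternative if you only want one specific card matched.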

After lots and lots of reading, a bit more reading and a bit of testing in a VM, it looks like VMware drivers potentially reference PCI hardware IDs located in two files for each driver present in the O/S. These files are driver.map and driver.ids.

What happens if we add the PCI ID of the Dell H700 to the list of IDs supported by the lsi_mr3 driver? After all, if the VCG is anything to go by, the lsi_mr3 driver used to support LSI 2108 based cards…

So using my ESXi 7 VM created earlier, let’s have a play. As ESXi runs from memory, we need to extract lsi_mr3.v00, make the required modifications and repackage the modified files back into lsi_mr3.v00. Finally, reboot to load the modified driver. What follows is the process to extract, modify and repackage the lsi_mr3.v00 driver.

In this example we are using a datastore called “datastore1”; yours may be named differently. I’ve possibly gone a bit overboard with the following instructions, but hey, better too much than too little.

- Enable SSH on your ESXi host

- Connect with an SSH client (e.g. PuTTY)

- Copy the compressed driver to somewhere where we can work on it:

cp /tardisks/lsi_mr3.v00 /vmfs/volumes/datastore1/s.tar

- Move to the datastore:

cd /vmfs/volumes/datastore1/

- Extract one:

vmtar -x s.tar -o output.tar

- Clean up:

rm s.tar

- Create a temp working directory:

mkdir tmp

- Move our working file to the temp directory:

mv output.tar tmp/output.tar

- Move to the temp directory:

cd tmp

- Extract two:

tar xf output.tar

- Clean up:

rm output.tar

- Edit the map file:

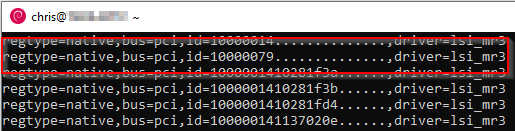

vi /vmfs/volumes/datastore1/tmp/etc/vmware/default.map.d/lsi_mr3.map

See KB1020302 for help with vi

- Paste in the following:

regtype=native,bus=pci,id=10000079..............,driver=lsi_mr3

Like this:

- Save and quit vi

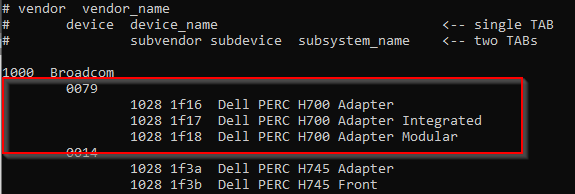

- Next, edit the ids file:

vi /vmfs/volumes/datastore1/tmp/usr/share/hwdata/default.pciids.d/lsi_mr3.ids

- Paste the following into the “Broadcom” block, conforming to the tab formatting detailed at the top of the file:

0079

1028 1f16 Dell PERC H700 Adapter

1028 1f17 Dell PERC H700 Adapter Integrated

1028 1f18 Dell PERC H700 Adapter Modular

- Save and quit vi

- Back in the tmp directory, compress one:

tar -cf /vmfs/volumes/datastore1/FILE.tar *

- Change to root of datastore:

cd /vmfs/volumes/datastore1/

- Compress two:

vmtar -c FILE.tar -o output.vtar

(ignore the “not a valid exec file” error)

- Compress three:

gzip output.vtar

- Rename file:

mv output.vtar.gz lsi_mr3.v00

- Clean up:

rm FILE.tar

- Finally copy modified lsi_mr3.v00 back to boot bank:

cp /vmfs/volumes/datastore1/lsi_mr3.v00 /bootbank/lsi_mr3.v00

Phew!!!
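The tab formatting in the .ids step is easy to get wrong when pasting into vi, so here is a sketch that prints the H700 entries with explicit tabs. It assumes lsi_mr3.ids follows the usual pci.ids layout (device lines indented with one tab, subsystem lines with two tabs and two spaces before the name); check the header of your own file before relying on that. `h700_ids_stanza` is a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the H700 entries with explicit tab indentation.
# Assumed pci.ids layout: one tab before a device ID, two tabs before
# "subvendor subdevice", then two spaces before the subsystem name.
# The 0079 line has no device name because the steps above give none.
h700_ids_stanza() {
    printf '\t0079\n'
    printf '\t\t1028 1f16  Dell PERC H700 Adapter\n'
    printf '\t\t1028 1f17  Dell PERC H700 Adapter Integrated\n'
    printf '\t\t1028 1f18  Dell PERC H700 Adapter Modular\n'
}

# Print the stanza so it can be reviewed or redirected to a scratch file.
h700_ids_stanza
```

The output still needs to be placed inside the “Broadcom” block by hand; the sketch only takes the guesswork out of the whitespace.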

Steps from above without comments (easier to read):

cp /tardisks/lsi_mr3.v00 /vmfs/volumes/datastore1/s.tar

cd /vmfs/volumes/datastore1/

vmtar -x s.tar -o output.tar

rm s.tar

mkdir tmp

mv output.tar tmp/output.tar

cd tmp

tar xf output.tar

rm output.tar

vi /vmfs/volumes/datastore1/tmp/etc/vmware/default.map.d/lsi_mr3.map

Paste in the following:

regtype=native,bus=pci,id=10000079..............,driver=lsi_mr3

vi /vmfs/volumes/datastore1/tmp/usr/share/hwdata/default.pciids.d/lsi_mr3.ids

Paste the following into the "Broadcom" block:

0079

1028 1f16 Dell PERC H700 Adapter

1028 1f17 Dell PERC H700 Adapter Integrated

1028 1f18 Dell PERC H700 Adapter Modular

tar -cf /vmfs/volumes/datastore1/FILE.tar *

cd /vmfs/volumes/datastore1/

vmtar -c FILE.tar -o output.vtar

(ignore the "not a valid exec file" error)

gzip output.vtar

mv output.vtar.gz lsi_mr3.v00

rm FILE.tar

cp /vmfs/volumes/datastore1/lsi_mr3.v00 /bootbank/lsi_mr3.v00

Finally… Reboot!
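If you would rather script the map file edit from the condensed steps than open vi, the sketch below appends the entry only when it is not already present, so running the procedure twice does not leave duplicate lines. `append_once` is a made-up helper name, and the path in the usage comment assumes the same datastore1 layout used throughout this post.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file unless an identical line exists.
append_once() {
    file=$1
    line=$2
    # -F treats the dots as literal characters, -x requires a whole-line match.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Assumed usage, run after the "tar xf output.tar" step:
#   append_once /vmfs/volumes/datastore1/tmp/etc/vmware/default.map.d/lsi_mr3.map \
#       'regtype=native,bus=pci,id=10000079..............,driver=lsi_mr3'
```

The fixed-string match matters here: without `-F`, grep would read each `.` in the entry as "any character" and could treat a different, more specific line as a duplicate.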

Testing

….aaaaand this is as far as I’ve got. As I said above, whilst I’ve confirmed my ESXi 7.0 install with the modified lsi_mr3 driver still boots as a VM, I’ve not yet been able to test this on my R710 server.

Am I confident that this will work?

Realistically, I’d give it about a 30% chance of working. After all, drivers change; functionality is added and removed all the time.

Whilst we are all in isolation thanks to COVID-19, what’s the harm in trying, right? It’ll either work or it won’t!

Hopefully I can test soon.

In the meantime, if you fancy having a go at the above on your LSI 2108 based adapter, please be my guest. Just remember to use the PCI IDs that match your hardware!

Update: Since writing this post, things have moved on. Check out this post for the new developments!

-Chris